
RE: [gnso-wpm-dt] Introduction to draft Work Prioritization model

- To: "Liz Gasster" <liz.gasster@xxxxxxxxx>, <gnso-wpm-dt@xxxxxxxxx>

- Subject: RE: [gnso-wpm-dt] Introduction to draft Work Prioritization model

- From: "Gomes, Chuck" <cgomes@xxxxxxxxxxxx>

- Date: Sat, 21 Nov 2009 21:26:04 -0500

Great work, Liz and Ken. In my opinion this is very constructive for the prioritization task in front of us. I strongly suggest that we specifically discuss this approach as at least one option for consideration. If anyone wants to discuss a different approach as well, I am fine with that, but it would be helpful if it were described in enough detail to compare with the approach described below. I also expect that we can work together to make additional improvements to this proposed methodology or any others we consider. In that regard, see the comments I inserted below, which we can discuss further on our call on Monday.

Chuck

________________________________

From: owner-gnso-wpm-dt@xxxxxxxxx [mailto:owner-gnso-wpm-dt@xxxxxxxxx] On Behalf Of Liz Gasster

Sent: Friday, November 20, 2009 3:42 PM

To: gnso-wpm-dt@xxxxxxxxx

Subject: [gnso-wpm-dt] Introduction to draft Work Prioritization model

Work Prioritization Team:

To help bring everyone up to the same level on the GNSO Work Prioritization project, I have consolidated various emails and organized our latest thinking into a single document. Again, this is a suggested draft starting point offered by staff, and the group is encouraged to modify it as it sees fit. There are three sections:

1) Recommended construct and methodology (see also attached spreadsheet)

2) Draft definitions for two dimensions

3) Procedural questions to be considered

1) Recommended construct and methodology

For this effort, Staff envisions a two-dimensional matrix or chart (X, Y) to help the GNSO Council graphically depict its work prioritization. The concept is based on rating each discrete project on two dimensions: Value/Benefit (Y axis) and Difficulty/Cost (X axis). Section 2 below outlines preliminary draft definitions for each dimension (or axis), so this section concentrates on what the chart means, how it would be produced, and the rating/ranking methodology, including sample instructions.

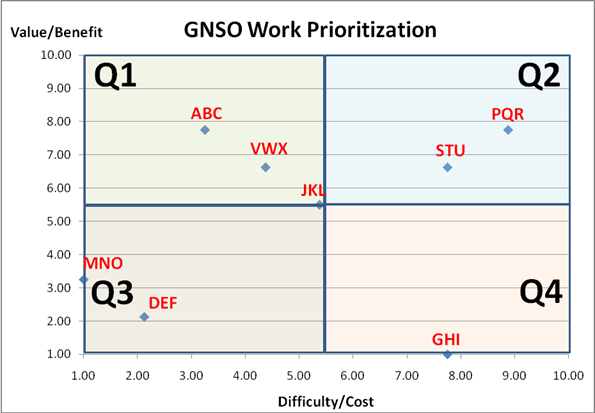

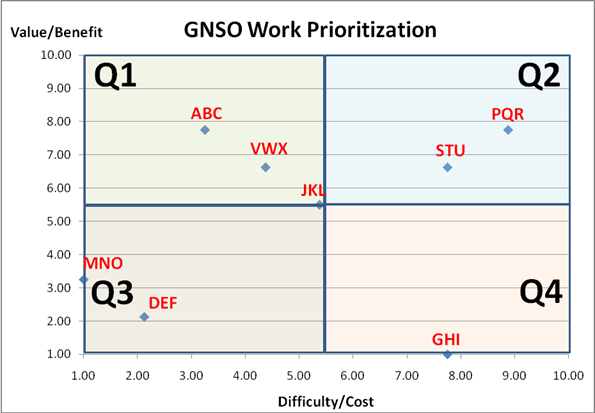

Illustration: The chart below shows 8 illustrative projects (simply labeled ABC, DEF, GHI, etc.) plotted on two dimensions: Value/Benefit (Y axis) and Difficulty/Cost (X axis).

[Gomes, Chuck] I have no idea whether you anticipated this or not, but it seems to me that a project naming scheme of three (or at most four) uppercase letters could be very useful in at least two ways: 1) it is concise, so multiple projects could be mapped on the matrix; 2) it might be possible to develop meaningful project identifiers that everyone can readily recognize without constantly going back to a legend (e.g., PED for PEDNR, IRTB for IRTP Part B PDP).
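As a rough illustration of the naming scheme suggested above, a project code can be checked mechanically. This is a minimal Python sketch assuming exactly that rule (three or four ASCII letters A to Z, uppercase only); the pattern and the function name `is_valid_project_id` are hypothetical, not part of any proposal.

```python
import re

# Hypothetical rule from the suggestion above: 3 or 4 uppercase ASCII letters.
PROJECT_ID = re.compile(r"[A-Z]{3,4}")


def is_valid_project_id(code: str) -> bool:
    """Return True if the whole code is 3 or 4 uppercase letters."""
    return PROJECT_ID.fullmatch(code) is not None


for code in ["PED", "IRTB", "PEDNR", "irtb"]:
    print(code, is_valid_project_id(code))
```

Under this rule "PED" and "IRTB" pass, while "PEDNR" (five letters) and lowercase "irtb" are rejected.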

In this sample depiction, Q1, Q2, Q3, and Q4 represent four quadrants, divided at the midpoint of each axis (each axis arbitrarily runs to a maximum of 10).

[Gomes, Chuck] Note that the axis values are not calibrated so that the quadrants are divided at the actual midpoints, but that is an easy fix. Also, I think the starting point should be 0 instead of 1. First, that helps solve the midpoint problem, and it also allows a project to be rated as having no value, which could theoretically be some rater's opinion. So I recommend that we consider changing the values on each axis to range from 0 to 10, with the midpoint (quadrant divider) at 5.
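The midpoint arithmetic behind this suggestion, for an axis running from a lowest rating $a$ to a highest rating $b$, is:

```latex
m = \frac{a + b}{2}, \qquad
m_{[1,10]} = \frac{1 + 10}{2} = 5.5, \qquad
m_{[0,10]} = \frac{0 + 10}{2} = 5
```

A 1-to-10 axis therefore splits its quadrants at 5.5, while a 0-to-10 axis splits them at the round value 5 and also admits a rating of 0 for a project judged to have no value.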

Thinking about Value/Benefit versus Difficulty/Cost, Q1 includes those projects that have the highest value and lowest cost;

[Gomes, Chuck] I am not sure we should ever consider cost alone. In most cases cost and level of difficulty probably go hand in hand, but that might not always be the case. A project could be very expensive but not very difficult, because it requires high-cost resources while being very straightforward to perform. Conversely, a project may not cost much but may not be practically doable. So I would suggest that we always pair cost and difficulty, while understanding that one factor (cost or difficulty) may predominate in different circumstances. A project with both high cost and high difficulty would probably be rated higher on that axis than one with high cost but low difficulty.

whereas Q4 would contain projects with the lowest value and highest cost. Project ABC, in this example, is rated 3.25 on Difficulty and 7.75 on Value; therefore, it is located squarely in Q1. Conversely, project GHI is rated 7.75 on Difficulty but only 1.00 on Value and is therefore placed in Q4.
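The quadrant placement described above can be expressed as a small classification rule. This Python sketch rests on several assumptions: `quadrant` is a hypothetical helper; the default divider of 5.5 assumes a 1-to-10 axis (5 would suit a 0-to-10 axis); points lying exactly on a divider are arbitrarily counted as "high"; and because the text defines only Q1 (high value, low cost) and Q4 (low value, high cost), the other two quadrants are labelled descriptively rather than as Q2 or Q3.

```python
def quadrant(difficulty: float, value: float, midpoint: float = 5.5) -> str:
    """Place a project by its (X = Difficulty/Cost, Y = Value/Benefit) point.

    A coordinate equal to the midpoint is treated as "high" (an arbitrary choice).
    """
    high_value = value >= midpoint
    high_cost = difficulty >= midpoint
    if high_value and not high_cost:
        return "Q1"  # highest value, lowest cost
    if high_cost and not high_value:
        return "Q4"  # lowest value, highest cost
    # The text does not say which of these two is Q2 and which is Q3.
    if high_value:
        return "high value / high cost"
    return "low value / low cost"


print(quadrant(3.25, 7.75))  # project ABC -> Q1
print(quadrant(7.75, 1.00))  # project GHI -> Q4
```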

How do the projects end up with these individual X, Y coordinates that determine their placement on the chart?

There are several options for rating/ranking individual projects. We will look specifically at two alternatives below:

Rating Alternative A:

One option is to ask each Council member, individually and separately, to rate/rank each project on both dimensions. Even with this alternative (and B, following), different methods are possible, for example: (1) assign each project a ranking from 1 to n under each column, or (2) use something simpler, e.g. High, Medium, and Low, to rate each project relative to the others. Since it is arguably easier to rate each project as H, M, or L than to rank them discretely from 1 to n, we illustrate the former approach here. Keep in mind that an ordinal ranking methodology would simply substitute a number (from 1 to 8 in our example) for the letters H, M, or L.

Directions: Rate each project as HIGH, MEDIUM, or LOW on each dimension (Value/Benefit, Difficulty/Cost), keeping in mind that the rating should be relative to the other projects in the set. There are no fixed anchors for either dimension, so raters are asked to group projects as LOW, MEDIUM, or HIGH compared to each other. A HIGH rating on Value simply means that the project is perceived to provide significantly greater benefit than projects rated MEDIUM.

[Gomes, Chuck] Whether we use high/medium/low or a numerical rating scheme, I think it would be very helpful to develop some guidelines to facilitate rating and, ideally, improve consistency across raters. The guidelines could identify factors that may be considered. For the value/benefit metric, factors could include things like the percentage of the overall community benefited, the seriousness of the problem being solved, etc. For the cost/difficulty metric, factors could include things like the length of time needed, the availability of resources, etc. These are just initial ideas that need to be developed further.

If there are 20 or more raters, we could provide a simple blank matrix and ask each rater to provide individual scores. For example, assume that the matrix below contains one individual's ratings for all 8 illustrative projects:

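| PROJECT | VALUE/BENEFIT | DIFFICULTY/COST |
|---------|---------------|-----------------|
| ABC     | L             | H               |
| DEF     | L             | M               |
| GHI     | H             | L               |
| JKL     | M             | M               |
| MNO     | L             | L               |
| PQR     | H             | H               |
| STU     | M             | M               |
| VWX     | M             | L               |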

Once all individual raters have submitted their results (as simple Word, Excel, or even plain-text email), Staff would convert each LOW to a score of 1, each MEDIUM to 5.5, and each HIGH to 10 (see attached spreadsheet, Rankings tab). We would then average the scores across all raters and produce a chart as shown in the attached spreadsheet (see Summary tab). Note: we used only 4 raters in the spreadsheet for illustrative purposes, but it is trivial to extend this to as many raters as we decide to involve.
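The conversion and averaging steps for Rating Alternative A can be sketched in a few lines of Python. This assumes each rater's sheet is a mapping from project code to a (Value/Benefit, Difficulty/Cost) pair of H/M/L letters and that every sheet covers the same projects; `SCORE` and `average_scores` are hypothetical names. The first sheet reuses two rows of the sample matrix above; the second sheet is made up purely for illustration.

```python
from statistics import mean

# Conversion stated in the text: LOW = 1, MEDIUM = 5.5, HIGH = 10.
SCORE = {"L": 1.0, "M": 5.5, "H": 10.0}


def average_scores(sheets):
    """Average each project's converted ratings across all rater sheets.

    Each sheet maps project code -> (value_rating, difficulty_rating).
    Returns project code -> (X = mean Difficulty/Cost, Y = mean Value/Benefit).
    Assumes every sheet rates the same projects (a missing one raises KeyError).
    """
    averages = {}
    for project in sheets[0]:
        values = [SCORE[sheet[project][0]] for sheet in sheets]
        costs = [SCORE[sheet[project][1]] for sheet in sheets]
        averages[project] = (mean(costs), mean(values))
    return averages


rater_1 = {"ABC": ("L", "H"), "GHI": ("H", "L")}  # rows from the sample matrix
rater_2 = {"ABC": ("M", "M"), "GHI": ("H", "M")}  # hypothetical second rater
print(average_scores([rater_1, rater_2]))
# {'ABC': (7.75, 3.25), 'GHI': (3.25, 10.0)}
```

Averaging letter grades this way yields coordinates on the same 1-to-10 scale as the chart, so the resulting (X, Y) pairs can be plotted directly.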

[Gomes, Chuck] I personally think that more differentiation than H/M/L provides would be helpful, and hence I lean toward a numerical system. In my opinion this is even more true if we are asking raters to compare projects when doing their rating.

Rating Alternative B:

Instead of asking each Council member to rate/rank each project individually, the Council could use a grouping technique (sometimes referred to as "DELPHI"). For example, suppose we set up 4 teams based on existing Stakeholder Group structures as follows:

Team1: CSG = 6

Team2: NCSG = 6

Team3: RySG (3) + RrSG (3) = 6

Team4: Others (NCA, Liaison) = 4-5

[Gomes, Chuck] Note that the team composition above strongly favors the Non-Contracted Parties House. Such a rating method would need to be developed carefully to maintain balance between the houses, and that would add a level of complexity.
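To make the balance concern concrete, the seats per house in the proposed teams can be tallied. This Python sketch assumes the GNSO house structure (CSG and NCSG in the Non-Contracted Parties House; RySG and RrSG in the Contracted Parties House) and leaves out Team 4, since the proposal does not assign its NCAs and liaisons to a house; `HOUSE`, `TEAMS`, and `seats_by_house` are hypothetical names.

```python
from collections import Counter

# House of each stakeholder group in the GNSO Council.
HOUSE = {"CSG": "Non-Contracted", "NCSG": "Non-Contracted",
         "RySG": "Contracted", "RrSG": "Contracted"}

# Seats per team from the proposal (Team 4 omitted: no house given).
TEAMS = {
    "Team1": {"CSG": 6},
    "Team2": {"NCSG": 6},
    "Team3": {"RySG": 3, "RrSG": 3},
}


def seats_by_house(teams):
    """Sum team seats per house, using each stakeholder group's house."""
    totals = Counter()
    for members in teams.values():
        for group, seats in members.items():
            totals[HOUSE[group]] += seats
    return totals


print(dict(seats_by_house(TEAMS)))  # {'Non-Contracted': 12, 'Contracted': 6}
```

With equal weight per team, two of the three stakeholder-group teams come entirely from one house, which is the imbalance noted above.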

Using this approach, we would have 4 small teams, and we would ask each one for a single CONSENSUS score sheet (whether ordinally ranked or rated H, M, or L).

[Gomes, Chuck] Ordinal ranking might not produce accurate values, because it forces comparisons among projects that may not represent value, difficulty, or both well. That would in turn affect the placement of projects on the matrix.

Then we would average those results to produce the overall chart (similar to the example in the spreadsheet). We should make it clear that we are discouraging teams from having members rate individually and then averaging their own results. The benefit of this modified DELPHI approach is that individuals (especially new Council members) can learn from each other and collectively develop what they think the most appropriate answer should be.

[Gomes, Chuck] It seems to me that we could encourage collaboration in rating whether or not we use this approach. In fact, whichever way we go, we might want to hold 30-minute overview sessions with Q&A for each project. Attendance would be at each rater's sole discretion; raters would only need to participate if they were not familiar with a project.

The above methodologies are subject to further discussion. Ultimately, the Council will need to decide:

1) What work prioritization construct should be used (we have suggested a simple two-dimensional Difficulty/Cost vs. Value/Benefit chart displayed in a four-quadrant model)?

2) How should it be executed, e.g. participation, consensus ranking (Delphi), individual ratings averaged, etc.?

[Gomes, Chuck] I think we need to keep it as simple as possible while still maximizing the meaningfulness of the results. For that reason I initially favor rating alternative A over B, but I am open to further debate.

2) Draft definitions for the X, Y dimensions

Staff proposes the following starting definitions for the axes in this conceptual model.

X - Difficulty/Cost ... this dimension relates to perceptions of complexity (e.g. technical), intricacy (e.g. many moving parts to coordinate), lack of cohesion (e.g. many competing interests), and, therefore, overall cost/time to develop a recommendation.

[Gomes, Chuck] Note that you have identified rating factors that can be used to develop rating guidelines, as I suggested above. This is good.

We could have created a third axis to indicate the amount of time required, but chose not to. That would add complexity, so we decided, initially, to include the concept of time in the definition of level of difficulty.

[Gomes, Chuck] I suggest avoiding that complexity and instead considering 'time required' as one of the factors in rating cost/difficulty.
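If 'time required' is treated as one factor within Difficulty/Cost, a rater's single X-axis score could be derived from several factor ratings. The following Python sketch assumes each factor is rated on the same 0-to-10 scale and combined by a weighted mean; the factor names (taken loosely from the X definition above), the equal default weights, and `composite_score` itself are hypothetical. Raising one factor's weight is one way to let cost or difficulty predominate in a given case.

```python
def composite_score(factor_ratings, weights=None):
    """Weighted mean of per-factor ratings that share one scale (e.g. 0 to 10).

    factor_ratings: factor name -> rating.
    weights: factor name -> relative weight; equal weights if omitted.
    """
    if weights is None:
        weights = {name: 1.0 for name in factor_ratings}
    total_weight = sum(weights[name] for name in factor_ratings)
    weighted_sum = sum(rating * weights[name] for name, rating in factor_ratings.items())
    return weighted_sum / total_weight


# Hypothetical ratings for one project on the X-axis factors.
ratings = {"complexity": 8, "intricacy": 6, "lack_of_cohesion": 4, "time_required": 6}
print(composite_score(ratings))  # 6.0
heavier_complexity = {"complexity": 2, "intricacy": 1, "lack_of_cohesion": 1, "time_required": 1}
print(composite_score(ratings, heavier_complexity))  # 6.4
```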

Y - Value/Benefit ... this dimension relates to perceptions of benefit to ICANN and its stakeholders in terms of internet growth/expansion, enhancing competitiveness, increasing security/stability, and improving the user experience.

[Gomes, Chuck] These factors can be used to create rating guidelines.

Please feel free to wordsmith the above descriptions...

3) Procedural questions to be considered

Once the matrix is developed and all projects are plotted, what should the Council do with the results? This is an important question to answer BEFORE the rating methodology is finalized and executed.

[Gomes, Chuck] We may only be able to partially answer this question in advance, because the details of the rating results will probably affect the answer. We should, though, be able to develop a process for the exercise.

The Council should discuss and decide questions such as:

1) How often should it be exercised, and/or what event triggers an analysis?

[Gomes, Chuck] Here's my opinion: it will need to be executed initially for all existing work; it will need to be executed for each new project that is considered, before a final decision is made on that project; and it probably should be done at least annually for all projects on the table at that time.
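The three triggers suggested above can be encoded as a simple check. In this Python sketch, a project that has never been rated (all existing work at the outset, or any newly proposed project) is always due, and otherwise a re-rating is due once a year has passed; the 365-day window and the name `rating_due` are assumptions for illustration.

```python
from datetime import date, timedelta


def rating_due(last_rated, today, max_age=timedelta(days=365)):
    """Return True if a project should be (re)rated.

    last_rated: date of the project's most recent rating, or None if never rated.
    """
    if last_rated is None:
        # Initial rating of existing work, or a new project awaiting a decision.
        return True
    # Periodic (at least annual) re-rating of everything still on the table.
    return today - last_rated >= max_age


print(rating_due(None, date(2010, 1, 15)))               # True
print(rating_due(date(2009, 12, 1), date(2010, 1, 15)))  # False
print(rating_due(date(2009, 1, 10), date(2010, 1, 15)))  # True
```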

2) What decisions or outcomes are expected from the process?

[Gomes, Chuck] Here's a start for an answer: a) the methodology should be usable for prioritizing all current tasks and for making decisions on new projects as they come up; b) lower-priority projects may need to be put on hold or slowed down for a defined period of time; c) additional volunteers may need to be recruited before some projects can be started.

Please let me know if we can provide any additional assistance prior to and during the upcoming conference call on Monday.

[Gomes, Chuck] Another question we need to answer is whether the first application of whatever methodology we decide to use should involve all existing projects or only a subset of them. To minimize the difficulty, I suggest we narrow the scope by weeding out projects that we think should proceed as is.

Thanks and regards,

Liz
